Docker

Contents

- 1 Containers vs Virtual Machines

- 2 What is Docker?

- 3 Core Components

- 4 Container Engine

- 5 Containers

- 6 Container Image

- 7 Docker Installation

- 8 Docker Daemon

- 9 Docker (important) Configuration Files and Directories

- 10 Docker Compose

- 11 Docker Network Modes

- 12 Docker Storage

- 13 Docker Registries

- 14 Docker Logging

- 15 My Little Projects

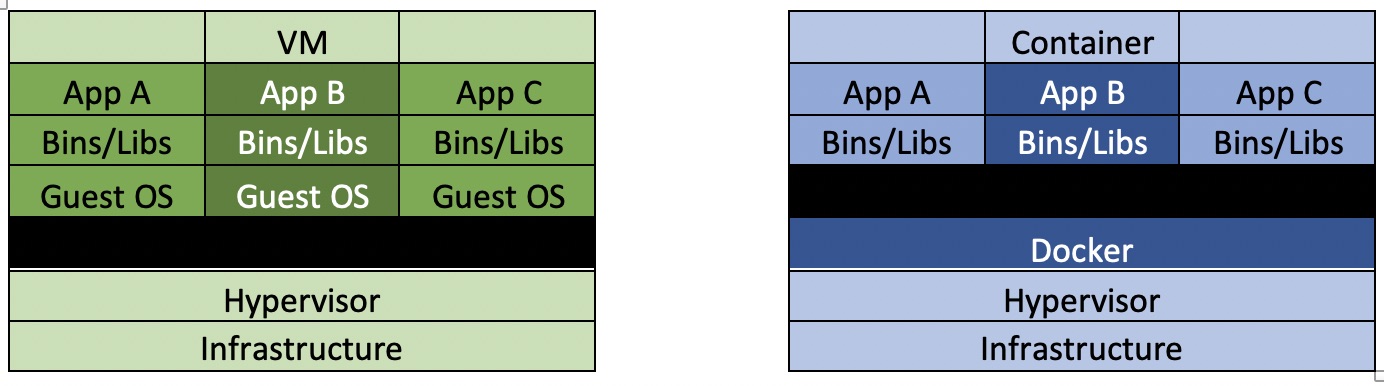

Containers vs Virtual Machines

Virtual machines (VMs) are an abstraction of physical hardware, turning one server into many servers. The hypervisor allows multiple VMs to run on a single machine. Each VM includes a full copy of an operating system, one or more apps, and the necessary binaries and libraries, which can take up many GBs. VMs can also be slow to boot.

A hypervisor is software that abstracts (isolates) operating systems and applications from the underlying computer hardware.

Containers use the isolation features of the Linux kernel and share the host machine's kernel with other containers. In contrast, a VM runs a full-blown "guest" operating system with virtual access to host resources through a hypervisor. In general, VMs provide an environment with more resources than most applications need.

VMs are also a viable host to run Docker containers on.

Virtual machines (VMs):

- Hardware level virtualization

- Heavyweight in disk space and memory

- Slow provisioning rate (i.e. minutes)

- More secure: each VM is completely isolated, down to its own kernel.

Containers:

- OS level virtualization

- Lightweight in disk space and memory

- Fast provisioning rate (i.e. milliseconds)

- Less secure: process-level isolation is weaker because containers share the host kernel.

What is Docker?

Docker is a platform for developers and sysadmins to build, ship, and run distributed applications, whether on laptops, data center VMs, or in the cloud.

It can be thought of as a tool that can package an application and its dependencies in a container that can run on any server. This helps enable flexibility and portability with where the application can run, whether on premises, public cloud, private cloud, bare metal, etc.

- A software platform for the packaging of applications into standardized units called containers.

- Has containers that run in their own isolated process space.

- Supports build once, deploy anywhere approaches.

Common reasons why one may want to use Docker

- Fast, consistent delivery of your applications:

- Docker streamlines the development lifecycle by allowing developers to work in standardized environments using local containers which provide your applications and services.

- Containers are great for continuous integration and continuous delivery (CI/CD) workflows.

- Responsive deployment and scaling:

- Docker's container-based platform allows for highly portable workloads.

- Docker containers can run on a developer's local laptop, physical or VM in a data center, cloud providers, or in a mixture of environments.

- Docker's portability and lightweight nature make it easy to dynamically manage workloads, scaling up or tearing down applications and services as business needs dictate, in near real time.

- Running more workloads on hardware:

- Docker is lightweight and fast.

- Provides a viable, cost-effective alternative to hypervisor-based VMs, so you can use more of your compute capacity to achieve your business goals, allowing you to do more with fewer resources.

Common reasons why one may NOT want to use Docker

- Performance is critical to the application:

- Although containers share the host kernel and do not emulate a full operating system, Docker still imposes some performance cost.

- Processes running within a container will not be quite as fast as those run on the native OS.

- If you need to get the best possible performance out of your server, you may want to avoid Docker.

- Monolithic Applications:

- VMs are generally more suitable for monolithic applications.

- Simply placing a monolithic application in a container does not turn it into a microservice.

- Security:

- VMs should be used in scenarios where security concerns outweigh the need for a lightweight solution.

- Container breakout to the host:

- Containers might run as the root user, making it possible to use privilege escalation to break the "containment" and access the host's operating system.

- Denial-of-Service Attacks:

- All containers share kernel resources. If one container can monopolize access to certain resources, including memory and more esoteric resources such as user IDs (UIDs), it can starve out other containers on the host, resulting in a denial of service (DoS), whereby legitimate users are unable to access part or all of the system.

- Kernel Exploits:

- Unlike in a VM, the kernel is shared among all containers and the host, magnifying the importance of any vulnerabilities present in the kernel. Should a container cause a kernel panic, it will take down the whole host.

- In VMs, the situation is much better: an attacker would have to route an attack through both the VM kernel and the hypervisor before being able to touch the host kernel.

Core Components

The primary service that Docker provides is the Docker Engine, which provides three sub-components:

- Docker Daemon

- Docker (REST) API

- A standardized set of instructions that can be used to send commands to the Docker Daemon. The API is most commonly interacted with via the Docker CLI. In Amazon ECS, the Docker API is interacted with by the ECS Agent.

- Docker CLI

- A client provided for interacting with the Docker Daemon. It sends commands to the Docker Daemon via the API, and the daemon carries them out.
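The CLI is only one possible client of the API. The sketch below shows how any program could send a request to the daemon over its Unix socket, assuming the default socket path /var/run/docker.sock and a user with permission to read it. The class and function names (UnixHTTPConnection, docker_get) are illustrative and not part of any Docker library.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        # "localhost" is only used for the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        # Replace the default TCP connect with a connect to the socket file.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path, socket_path="/var/run/docker.sock"):
    """Send GET <path> to the daemon and return the decoded JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return json.loads(response.read().decode("utf-8"))
    finally:
        conn.close()
```

On a host with a running daemon, docker_get("/version") should return the same kind of information that the CLI prints for "docker version". The call fails with a connection error if the socket does not exist or is not readable by the current user.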

Container Engine

The container engine, or Docker engine, lies at the core of the container platform and listens for container API requests along with managing objects such as images, containers, networks, and volumes.

The Docker engine manages the following Docker objects:

- Image

- Container

- Network

- A network is a configuration telling Docker how to connect a container to the outside world, or to other Docker containers.

- Volumes

- Volumes are storage devices that can be consumed by Docker to provide storage for the container for shared data, persistent data, or better performing ephemeral storage.

However, the implementation of these objects can vary significantly. There are different pluggable subsystems for managing most of the resources. By default, Docker uses the containerd runtime for managing running containers; competing runtimes, such as rkt, do exist.

Similarly there are different storage drivers for interacting with different filesystems and allowing for efficient storage reuse for containers while maintaining separation.

So you should think of the Docker Daemon as an orchestration layer for a series of underlying technologies.

Apart from Docker, some other engines that are available include: LXC and rkt.

Dockerfile

A Dockerfile is a script composed of various commands (instructions) and arguments, listed successively, that automatically perform actions on a base image in order to create a new image.

Each Dockerfile should begin with at least one FROM instruction which defines the parent image (or predefined term "scratch" if you build your own base image).

Each instruction in a Dockerfile creates a new layer, and these intermediate images are visible while the image is being built.

A Dockerfile defines the container's behavior through instructions such as FROM, RUN, CMD, and ENV.

Example Dockerfile:

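    FROM ubuntu:15.10
    # Refresh the package index first; a fresh base image ships without package lists.
    RUN apt-get update && apt-get install -y python-pip python-dev build-essential
    RUN pip install flask
    ADD app.py /
    EXPOSE 5000
    ENTRYPOINT python app.py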

- Each instruction in the image's Dockerfile corresponds to a layer in the image.

- Each layer is only a set of differences from the layer before it. The layers in Docker image are stacked on top of each other.

- When you create a new container, you add a new writable layer on top of the underlying layers. This layer is often called the "container layer". All changes made to the running container, such as writing new files, modifying existing files, and deleting files, are written to this writable container layer.
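The layering described above can be modelled with plain dictionaries. This is a toy sketch rather than how a storage driver is implemented: each layer maps a path to its content, later layers override earlier ones, and a made-up WHITEOUT marker stands for a deletion. The file names and contents are hypothetical and loosely follow the example Dockerfile.

```python
WHITEOUT = object()  # marker meaning "this path was deleted in this layer"


def merged_view(image_layers, container_layer):
    """Return the filesystem a container sees: image layers plus its writable layer."""
    view = {}
    for layer in list(image_layers) + [container_layer]:
        for path, content in layer.items():
            if content is WHITEOUT:
                view.pop(path, None)   # a deletion hides the file from lower layers
            else:
                view[path] = content   # a later layer overrides earlier ones
    return view


# Read-only image layers, oldest first (hypothetical contents).
image_layers = [
    {"/bin/sh": "shell", "/etc/os-release": "ubuntu"},  # FROM
    {"/usr/bin/pip": "pip"},                            # RUN ... install python-pip
    {"/app.py": "flask app v1"},                        # ADD app.py /
]

# Changes made inside a running container land only in its own writable layer.
container_layer = {
    "/app.py": "patched at runtime",
    "/tmp/cache": "scratch data",
    "/usr/bin/pip": WHITEOUT,
}
```

Calling merged_view(image_layers, container_layer) gives the container's view, in which /app.py is the patched version and /usr/bin/pip is gone, while image_layers itself is left unchanged. A second container started from the same image with an empty writable layer would still see the original files.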

LXC:

- LXC uses the same Linux kernel features (namespaces, cgroups, etc.) to provide a lightweight alternative to VMs.

- It is an operating system container, compared to Docker's application containers.

- Early versions of Docker (before v0.9) used LXC as the container execution driver.

rkt:

- rkt is a container engine developed by CoreOS that implements the older App Container (appc) specification. The current industry standard is defined by the Open Container Initiative (OCI).

- With OCI (https://www.opencontainers.org/), the industry has come together in a single location to define specifications around applications containers.

- OCI is intended to incorporate the best elements of existing container efforts like appc, and several of the appc maintainers are participating in OCI projects as maintainers and on the Technical Oversight Board (TOB).

Containers

A container is built from a container image which can be thought of as the blueprint for the container, with the container being the actual physical entity.

A container is a running instance of an image. It contains an ephemeral copy of the filesystem constructed from the layers in the image, a running process or processes, utilized namespaces, and other configured elements like connected volumes or networks.

Benefits Offered By containers:

The following attributes associated with a container make it an attractive proposition to develop your application:

- Lightweight:

- Docker containers running on a single machine share that machine's operating system kernel; they start instantly and use less compute and RAM.

- Images are constructed from filesystem layers and share common files. This minimizes disk usage and makes image downloads much faster.

- Standard and Portable:

- Docker containers are based on open standards and run on the majority of operating systems and infrastructure, including VMs, bare metal, and the cloud.

- Secure:

- Docker containers isolate applications from one another and from the underlying infrastructure.

- Docker provides the strongest default isolation to limit app issues to a single container instead of the entire machine.

- Interchangeable:

- You can deploy updates and upgrades on the fly.

Underlying Container Technology: Linux:

Docker is written in Go and takes advantage of several features of the Linux kernel to deliver its functionality.

Min requirements:

- Docker requires a 64-bit Linux installation

- Also version 3.10 or higher of the Linux kernel.

- The Linux kernel provides a mechanism for isolating processes in namespaces.

Namespaces:

- Docker uses a technology called namespaces to provide the isolated workspace called the container.

- Docker uses this to isolate processes and apps from each other. This leads the process or app to think that it is operating inside its own environment.

- Network Namespace: one of the more important namespaces for Docker, used to isolate containers' networks from each other (i.e. each container can have its own network interfaces, route tables, and iptables rules).

- Example of namespaces:

- pid (Process IDs)

- net (Network interfaces)

- ipc (inter-process communication)

- mnt (filesystem mount points)

- uts (kernel and version identifiers - hostname, domain)

- user (user and group IDs for permissions)
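On a Linux host, the namespaces a process belongs to are visible under /proc/<pid>/ns, where each entry is a symbolic link whose target identifies the namespace. The sketch below reads those links. The helper names are illustrative, and the functions return empty results on systems without /proc.

```python
import os


def list_namespaces(pid="self"):
    """Map namespace name -> kernel identifier, e.g. 'net' -> 'net:[4026531992]'."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or /proc is not mounted
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry vanished or is not readable
    return namespaces


def share_namespace(pid_a, pid_b, name):
    """True when both processes are in the same namespace of the given kind."""
    a = list_namespaces(pid_a).get(name)
    b = list_namespaces(pid_b).get(name)
    return a is not None and a == b
```

Comparing a host shell with a process inside a container (reading another user's process usually requires root) would typically show different identifiers for pid, net, mnt, ipc, and uts. With --net=host, the net entry would be expected to match the host's.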

Control Groups:

- Cgroups (control groups) are a Linux kernel feature that allows limiting and monitoring the resource usage of processes.

- They allow an admin to apply soft or hard memory, CPU, and other constraints.

- Control Groups Documentation

- Example: /sys/fs/cgroup/cpu/docker/<container ID>

    [ec2-user@ip-172-31-63-97 80a282dff43fde59b15138eb629b36c548aa20aa7502689911e703cb7e90d9c0]$ pwd
    /sys/fs/cgroup/cpu/docker/80a282dff43fde59b15138eb629b36c548aa20aa7502689911e703cb7e90d9c0
    [ec2-user@ip-172-31-63-97 80a282dff43fde59b15138eb629b36c548aa20aa7502689911e703cb7e90d9c0]$ cat cpu.shares
    256
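A cpu.shares value such as the 256 shown above is a relative weight, not an absolute limit. It only matters when containers compete for CPU, and each container's portion is then its weight divided by the sum of all weights. The sketch below works through that arithmetic. The container names and the other two weights are made up, with 1024 used because it is the value Docker applies when no CPU shares are requested.

```python
def cpu_fractions(shares_by_container):
    """Fraction of CPU time each container gets when all of them are CPU-bound."""
    total = sum(shares_by_container.values())
    return {name: shares / total for name, shares in shares_by_container.items()}


# Hypothetical host with three busy containers.
shares = {"web": 256, "batch": 1024, "db": 768}
fractions = cpu_fractions(shares)
# web gets 256 / 2048 = 12.5%, batch 50%, db 37.5% of the contended CPU time
```

If the other containers are idle, the container with 256 shares can still use all the available CPU; the weight only decides who wins under contention.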

Underlying Container Technology: Windows

Windows Containers include two different container types, or runtimes.

Windows Server Containers:

- Provide application isolation through process and namespace isolation technology.

- Share a kernel with the container host and all containers running on the host.

- They do not provide a hostile security boundary.

Hyper-V Isolation:

- Expands on the isolation provided by Windows Server Containers by running each container in a highly optimized virtual machine.

- In this configuration, the kernel of the container host is NOT shared with other containers on the same host.

- These containers are designed for hostile multi-tenant hosting with the same security assurances of Virtual Machines.

Container Image

A container image, or Docker image, is a lightweight, stand-alone, executable package of a piece of software that includes everything needed to run it, such as:

- code

- runtime

- system tools

- system libraries

- settings

It allows developers to package software into standardized units for development, shipment, and deployment. It also isolates software from other software/dependencies, which reduces conflicts between teams running different software on the same infrastructure.

Another definition: Images are a collection of read-only filesystem layers and related meta-data required to create a running container.

A container is launched by running a Docker image, and hence a container can be considered as a runtime instance of an image.

- To build an image, use: $ docker build

- To push an image to a registry, use: $ docker push

Docker Installation

Docker has officially removed support and packages for the Community Edition (CE) on RHEL 7. However, many customers don't want to pay for Docker Enterprise and want to use the same free CE version that runs on CentOS 7. This is not officially supported by Docker or Red Hat but, for all intents and purposes, should work without any issues. The steps below show how to do this:

RHEL 7

(From work training)

1) To install Docker on RHEL, we have to choose between installing docker.io and Docker EE. Since a subscription is required to install Docker EE for RHEL, this tutorial installs docker.io.

Add the non-standard repository to the OS repo list (note that this URL is the EPEL 6 release package; confirm that it matches the OS release before using it on RHEL 7):

$ sudo rpm -iUvh http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

Install Docker:

$ sudo yum -y install docker-io

(From Karthik's way)

$ sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

$ sudo yum-config-manager --setopt="docker-ce-stable.baseurl=https://download.docker.com/linux/centos/7/x86_64/stable" --save

$ sudo yum-config-manager --add-repo=http://mirror.centos.org/centos/7/extras/x86_64/

$ sudo rpm --import https://www.centos.org/keys/RPM-GPG-KEY-CentOS-7

$ sudo yum install container-selinux docker-ce

3) Start Docker service:

Use either of the following, depending on the init system:

$ sudo service docker start

$ sudo systemctl start docker

4) Optionally create a Docker user group to provide non root users permission to access Docker daemon:

$ sudo groupadd docker

5) Add 'ec2-user' to Docker group:

$ sudo usermod -aG docker ec2-user

6) Verify if Docker daemon is running:

$ docker info

CentOS 7

1) Install required packages:

$ sudo yum install -y yum-utils device-mapper-persistent-data lvm2

2) Add repository URL:

$ sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

3) Update the OS:

$ sudo yum update

4) Install Docker CE:

$ sudo yum install -y docker-ce

5) Add 'centos' user to docker group:

$ sudo usermod -aG docker centos

6) Verify if Docker is installed by running:

$ docker info

Amazon Linux

1) Update the OS:

$ sudo yum update -y

2) Install docker:

$ sudo yum install -y docker

3) Start Docker service:

Use either of the following, depending on the init system:

$ sudo service docker start

$ sudo systemctl start docker

4) The Docker CLI sends commands to the Docker daemon through the Unix socket /var/run/docker.sock.

5) Add 'ec2-user' to the docker group to give 'ec2-user' permission to access the Docker daemon:

$ sudo usermod -aG docker ec2-user

6) Log out and log back in (exit the SSH session and reconnect if connected through SSH) so that the docker group permissions take effect for 'ec2-user'.

7) Verify if the Docker service is running by running the command:

$ docker info

Ubuntu 16.xx

Ref: https://docs.docker.com/engine/install/ubuntu/

1) Update the OS:

$ sudo apt update

2) Install required packages:

$ sudo apt-get install apt-transport-https ca-certificates curl software-properties-common gnupg-agent

3) Add secure gpg keys:

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

4) Verify the key (Check the docker website for the latest key as it may have changed):

$ sudo apt-key fingerprint 0EBFCD88

5) Add Docker repository:

$ sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

6) Sync the package index files:

$ sudo apt update

7) Update the OS:

$ sudo apt upgrade

8) Install Docker:

$ sudo apt-get install docker-ce

Or, to name the CLI and containerd packages explicitly:

$ sudo apt-get install docker-ce docker-ce-cli containerd.io

9) Optionally add the 'ubuntu' user to docker group:

$ sudo usermod -aG docker ubuntu

10) Verify if Docker is installed properly by running nginx container:

$ docker run -d nginx

11) Check if nginx container was launched:

$ docker ps

Windows

Ref: https://hub.docker.com/editions/community/docker-ce-desktop-windows

1) Download the Docker toolbox from the Docker store.

2) Save the Docker toolbox image on Windows system and start it when download is complete.

3) Follow the steps in the installation wizard.

4) Log out to complete the installation.

5) After installation completes, enable Hyper-V if it is not already enabled. If a pop-up window asks for confirmation, click OK.

Mac OS

Docker Desktop for Mac: https://hub.docker.com/editions/community/docker-ce-desktop-mac/

Docker Installer: https://download.docker.com/mac/stable/Docker.dmg

1) To install Docker on Mac, download the Docker image from the link shown above.

2) Double click on the downloaded image which should open a pop-up window asking you to copy Docker to the Mac applications folder.

3) From the Applications folder, double-click on Docker and a pop-up with the following message should appear: "Docker is an application downloaded from the Internet. Are you sure you want to open it?"

4) Select the "Open" option, which should start Docker on the Mac. You should see a Docker icon in the Mac menu bar that shows the status of the service and provides options to stop Docker or change its preferences.

Docker Daemon

The Docker Daemon, known as dockerd, is a long-running server process that listens for API requests and manages Docker objects such as images, containers, networks, and volumes.

Similar to the ECS agent, the Docker daemon loads its configuration attributes from one of the four configuration files listed under "Docker (important) Configuration Files and Directories". The required options must be added to one of these files before the Docker daemon starts for them to take effect.

The server docker daemon manages:

- network

- data volumes

The client docker CLI manages:

- container

- image

Examples of options we can pass to the Docker daemon include the base device size, debug-level logging, storage options, and networking options.

All available options for Docker Daemon: https://docs.docker.com/engine/reference/commandline/dockerd/

Daemon Directory: /var/lib/docker

- The Docker daemon persists all data in a single directory. This tracks everything related to Docker, including containers, images, volumes, service definitions, and secrets.

- You can configure the Docker daemon to use a different directory with the data-root configuration option.

- Since the state of a Docker daemon is kept in this directory, make sure you use a dedicated directory for each daemon. If two daemons share the same directory, for example over NFS, you are going to experience errors that are difficult to troubleshoot.

Configuration of Docker Daemon

There are two ways to configure the Docker daemon:

- A JSON configuration file (/etc/docker/daemon.json on Linux)

- Flags passed when starting dockerd.
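The two approaches express the same settings in different forms. The sketch below renders a small set of options both ways, using the data-root, debug, and storage-opts keys and their matching dockerd flags. The function names and the example path /mnt/docker are illustrative only.

```python
import json


def render_daemon_json(data_root=None, debug=False, storage_opts=None):
    """Build the text of a daemon.json file from a few common options."""
    config = {}
    if data_root:
        config["data-root"] = data_root
    if debug:
        config["debug"] = True
    if storage_opts:
        config["storage-opts"] = list(storage_opts)
    return json.dumps(config, indent=2, sort_keys=True)


def equivalent_flags(data_root=None, debug=False, storage_opts=None):
    """Build the dockerd command-line flags that express the same options."""
    flags = []
    if data_root:
        flags += ["--data-root", data_root]
    if debug:
        flags.append("--debug")
    for opt in storage_opts or []:
        flags += ["--storage-opt", opt]
    return flags
```

For example, render_daemon_json(data_root="/mnt/docker", debug=True) yields a two-key JSON document, and equivalent_flags with the same arguments yields ["--data-root", "/mnt/docker", "--debug"]. It is generally safer to pick one mechanism per option, since Docker's documentation warns against setting the same option both in the file and as a flag.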

Configuration of Docker Daemon during boot-up

To set configuration options for the Docker daemon during boot-up, we cannot use the shell-script format in user data, because the script section is executed after the Docker daemon starts. To configure the Docker daemon before it starts, use the cloud-boothook format in the user data section.

- Cloud-boothook

- Cloud-boothook is the earliest "hook" available. User-data scripts run much later in the boot process.

- A cloud-boothook runs on every instance boot; cloud-init-per can be used to control how often it executes.

The content in boothook is saved in /var/lib/cloud directory and is executed immediately.

- If we check the order of execution in /etc/init we can see that cloud-init is executed before the docker service is started. Hence passing Docker configuration through boothook will make sure the Docker configuration file is updated as soon as cloud-init detects it and before Docker daemon is started.

Example:

- A common example of passing configuration settings to the docker daemon would be the modification of the base device size. By default, docker limits the size of images and containers to 10GB. There may be some users that will need to increase the dm.basesize.

- Below we are setting the Docker option dm.basesize in the file /etc/sysconfig/docker. Once the instance is up, the docker daemon will start loading the options from this file. The same option can be passed as part of docker-storage configuration file.

    #cloud-boothook
    cloud-init-per once docker_options echo 'OPTIONS="${OPTIONS} --storage-opt dm.basesize=20G"' >> /etc/sysconfig/docker

- Best practice is to use /etc/sysconfig/docker file to set Docker daemon options and use /etc/sysconfig/docker-storage file to set LVM specific options.

- After the instance boots up, the basesize option set can be verified by launching an nginx container and running the command "df -h" inside the container.
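When the base size varies between environments, the boothook text can be generated rather than typed by hand. The sketch below builds the same two-line user data shown in the example above. The function name build_boothook and its parameters are hypothetical, and the output is only a string, so nothing is written to disk.

```python
def build_boothook(basesize_gb, sysconfig_path="/etc/sysconfig/docker"):
    """Return cloud-boothook user data that appends dm.basesize to OPTIONS."""
    if basesize_gb <= 0:
        raise ValueError("basesize_gb must be positive")
    # ${OPTIONS} is left for the shell on the instance to expand, not Python.
    option_line = f'OPTIONS="${{OPTIONS}} --storage-opt dm.basesize={basesize_gb}G"'
    return (
        "#cloud-boothook\n"
        f"cloud-init-per once docker_options echo '{option_line}' >> {sysconfig_path}\n"
    )
```

The returned text can be pasted into the user data field of an instance. The "#cloud-boothook" marker has to stay on the first line for cloud-init to treat the content as a boothook.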

Docker (important) Configuration Files and Directories

- /etc/sysconfig/docker:

- Configured by user.

- /etc/sysconfig/docker-storage:

- Configured by programs, but can be edited by user.

- /etc/sysconfig/docker-storage-setup:

- Configured by user. Available on RHEL Atomic Host and ECS-optimized AMIs.

- /etc/docker/daemon.json

- Daemon configuration file.

- /sys/fs/cgroup/*:

- A cgroup limits an application to a specific set of resources. Control groups allow Docker Engine to share available hardware resources among containers and optionally enforce limits and constraints. For example, you can limit the memory available to a specific container.

- In /sys/fs/cgroup/cpu/docker, you can see the running container ID (full string).

    /sys/fs/cgroup/cpu/docker
    [ec2-user@ip-172-31-63-97 docker]$ ls -al
    total 0
    drwxr-xr-x 3 root root 0 Sep 9 21:53 .
    dr-xr-xr-x 6 root root 0 Sep 9 21:42 ..
    drwxr-xr-x 2 root root 0 Sep 9 21:53 80a282dff43fde59b15138eb629b36c548aa20aa7502689911e703cb7e90d9c0
    -rw-r--r-- 1 root root 0 Sep 9 21:53 cgroup.clone_children
    .
    .
    .
    -rw-r--r-- 1 root root 0 Sep 9 21:53 cpu.rt_runtime_us
    -rw-r--r-- 1 root root 0 Sep 9 21:53 cpu.shares
    -r--r--r-- 1 root root 0 Sep 9 21:53 cpu.stat
    -rw-r--r-- 1 root root 0 Sep 9 21:53 notify_on_release
    -rw-r--r-- 1 root root 0 Sep 9 21:53 tasks

- Docker images are stored in /var/lib/docker/image and /var/lib/docker/overlay2 (the layer directories, including all the upperdirs). /var/lib/docker/overlay2/l contains shortened symbolic links to the layers used as lowerdirs.

- Docker volumes are located in: /var/lib/docker/volumes/<volume_name>/_data/

Docker Compose

https://docs.docker.com/compose/

Per the tutorial "Creating a Cluster with an EC2 Task Using the Amazon ECS CLI", here is the compose file, which you can call docker-compose.yml.

The web container exposes port 80 to the container instance for inbound traffic to the web server. A logging configuration for the containers is also defined.

    version: '3'
    services:
      web:
        image: amazon/amazon-ecs-sample
        ports:
          - "80:80"
        logging:
          driver: awslogs
          options:
            awslogs-group: ec2-tutorial
            awslogs-region: us-west-2
            awslogs-stream-prefix: web

Another example (from Knet lab). In this example, you are creating a Docker Compose file that creates two containers, WordPress and a MySQL database:

    version: "2"
    services:
      wordpress:
        cpu_shares: 100
        image: wordpress
        links:
          - mysql
        mem_limit: 524288000
        ports:
          - "80:80"
      mysql:
        cpu_shares: 100
        environment:
          MYSQL_ROOT_PASSWORD: password
        image: "mysql:5.7"
        mem_limit: 524288000

When using the Docker Compose version 3 format, the CPU and memory settings must be specified separately. Create a file named ecs-params.yml with the following content:

    version: 1
    task_definition:
      services:
        web:
          cpu_shares: 100
          mem_limit: 524288000
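The move from the version 2 layout (resource limits inline) to the version 3 layout (limits in ecs-params.yml) can be sketched as a split over plain dictionaries. The function names are hypothetical and the input is assumed to be the already-parsed services mapping, so no YAML library is needed. The sketch also shows that the mem_limit value of 524288000 bytes is exactly 500 MiB.

```python
RESOURCE_KEYS = ("cpu_shares", "mem_limit")


def split_resources(services):
    """Separate resource limits from service definitions.

    Returns (compose_services, ecs_params), where ecs_params follows the
    ecs-params.yml layout: version / task_definition / services.
    """
    compose_services, ecs_services = {}, {}
    for name, spec in services.items():
        compose_services[name] = {k: v for k, v in spec.items() if k not in RESOURCE_KEYS}
        limits = {k: spec[k] for k in RESOURCE_KEYS if k in spec}
        if limits:
            ecs_services[name] = limits
    ecs_params = {"version": 1, "task_definition": {"services": ecs_services}}
    return compose_services, ecs_params


def bytes_to_mib(value):
    """Convert a byte count such as mem_limit into MiB."""
    return value / (1024 * 1024)


# The wordpress service from the version 2 example, as a parsed mapping.
v2_services = {
    "wordpress": {
        "cpu_shares": 100,
        "image": "wordpress",
        "links": ["mysql"],
        "mem_limit": 524288000,
        "ports": ["80:80"],
    }
}
compose_services, ecs_params = split_resources(v2_services)
```

After the split, compose_services keeps only image, links, and ports, while ecs_params carries cpu_shares and mem_limit for the same service name.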

Docker Network Modes

Modes:

- Bridge: a Docker-managed virtual network

- Host: containers share host's network namespace

- awsvpc: network namespace associated with an AWS ENI

Port mapping:

- Static: the container port is mapped to a fixed host port, e.g. 80 -> 80.

- Dynamic: the container port is mapped to an ephemeral host port, e.g. 80 -> 32432.

    $ docker network ls
    NETWORK ID          NAME      DRIVER    SCOPE
    86dc53b80450        bridge    bridge    local
    a8decea1c41f        host      host      local
    cb822f1d004d        none      null      local

Below are Docker's built-in network types.

- host:

- Shares network interfaces with the host namespace.

- Container can interact with processes running on the host via loopback.

- Container can listen directly on a host IP address.

- Useful for "standalone" helper containers for processes running on the host.

$ docker run --net=host hello-world:latest

- none:

- Bare minimum network mode

- Only contains loopback network

- No external connectivity.

- Good for isolated containers that require no outward connectivity.

$ docker run --net=none hello-world:latest

- bridge:

- Default network mode

- Creates a bridge device (docker0) for containers.

- By default, Docker configures the bridge (docker0) with the subnet 172.17.0.0/16. Be aware that this can cause routing issues when the host needs to reach another network that uses the same range.

- Allows containers, via NAT rules, to make outward network connections.

- Containers are isolated from the host.

- Can expose ports allowing inward network connections

$ docker run --net=bridge hello-world:latest

- container:

- You can share network namespaces of other containers.

- Useful for "sidecar" containers, such as a tcpdump container.

- Container will see the same network interfaces as the source container.

- Useful for troubleshooting networking issues.

$ docker run --net=container:deadbeef hello-world:latest
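The routing issue mentioned for the bridge mode arises when a network the host needs to reach overlaps with 172.17.0.0/16. A quick check is sketched below with Python's ipaddress module. The function name is illustrative and the CIDR ranges in the usage note are made-up examples.

```python
import ipaddress

# Default subnet of the docker0 bridge, as noted in the bridge section.
DEFAULT_BRIDGE_SUBNET = ipaddress.ip_network("172.17.0.0/16")


def conflicts_with_bridge(cidrs, bridge=DEFAULT_BRIDGE_SUBNET):
    """Return the CIDR ranges from `cidrs` that overlap the Docker bridge subnet."""
    return [cidr for cidr in cidrs if ipaddress.ip_network(cidr).overlaps(bridge)]
```

For instance, conflicts_with_bridge(["10.0.0.0/16", "172.17.8.0/24", "172.31.0.0/16"]) returns only "172.17.8.0/24". Traffic from the host to that range would be routed to docker0 instead of the real network.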

Exposing Ports

Given that a container defaults to the "bridge" network mode, and thus has no inbound connectivity, Docker provides a mechanism to automatically set up NAT rules that map ports on the container to ports on the host. If no host port is specified, the published port is assigned a random high-numbered host port.

(Docker run)

Expose container port 80 on host port 8080:

$ docker run --publish 8080:80 hello-world:latest

(Dockerfile)

Declare that this image listens on port 8080 (EXPOSE only documents the port; it is published at run time with --publish or -P):

EXPOSE 8080

Expose the DNS ports in both TCP and UDP from this container:

$ docker run -p 53:53/tcp -p 53:53/udp hello-world:latest
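The values passed to --publish (-p) in the commands above follow a small grammar. The sketch below parses the two simplest forms, hostPort:containerPort and a bare containerPort, each with an optional /tcp or /udp suffix. It deliberately ignores the other forms that Docker accepts (IP-prefixed bindings, port ranges), and the function name is illustrative.

```python
def parse_publish(spec):
    """Parse 'hostPort:containerPort[/proto]' or 'containerPort[/proto]'."""
    ports, _, protocol = spec.partition("/")
    protocol = protocol or "tcp"  # TCP is assumed when no protocol is given
    if protocol not in ("tcp", "udp"):
        raise ValueError(f"unsupported protocol in this sketch: {protocol!r}")
    parts = ports.split(":")
    if len(parts) == 1:
        # No host port given: Docker would pick an ephemeral host port.
        host_port, container_port = None, int(parts[0])
    elif len(parts) == 2:
        host_port, container_port = int(parts[0]), int(parts[1])
    else:
        raise ValueError("IP-prefixed forms are not handled in this sketch")
    return {"host_port": host_port, "container_port": container_port, "protocol": protocol}
```

parse_publish("8080:80") describes the static mapping from the first example, parse_publish("53:53/udp") the UDP half of the DNS example, and parse_publish("80") a dynamic mapping whose host port is not known until the container starts.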

Container Network Model

Docker networking architecture is based on a set of interfaces called the Container Networking Model or CNM. This philosophy provides application portability across diverse infrastructures.

- The network sandbox, endpoint, and network are OS and infrastructure agnostic high-level constructs in the CNM that provide applications with a uniform experience, irrespective of infrastructure stack.

- CNM also provides pluggable and open interfaces for the community, vendors, and users to leverage additional functionality, visibility, and control over their networks. These are represented as the driver interfaces (network driver, IPAM driver).

Sandbox

- Contains the configuration of a container's network stack. This includes the management of the container's interfaces, routing table, and DNS settings.

- An implementation of a Sandbox could be a Windows HNS or Linux Network namespace, a FreeBSD Jail, or other similar concept.

- A Sandbox may contain many endpoints from multiple networks.

Endpoint

- An Endpoint joins a Sandbox to a Network. The Endpoint construct exists so the actual connection to the network can be abstracted away from the application.

- This helps maintain portability, so that a service can use different types of network drivers without being concerned with how it's connected to that network.

Network

- The CNM does not specify a Network in terms of the OSI model.

- An implementation of a Network could be a Linux bridge, a VLAN, etc.

- A Network is a collection of endpoints that have connectivity between them.

- Endpoints that are not connected to a network do not have connectivity on a network.

Network Driver

- These drivers are for the actual implementation of the network.

- They are pluggable so that different drivers can be used and interchanged easily to support different use cases.

IPAM Driver

- This is the native Docker IP address management driver, which provides default subnets or IP addresses for the networks and endpoints if they are not specified.
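The relationship between the three CNM constructs can be pictured with a toy data model. This is a sketch of the concepts only, not of Docker's implementation. The class names mirror the CNM terms, while the container names, network names, and IP addresses in the usage note are invented.

```python
from dataclasses import dataclass, field


@dataclass
class Network:
    """A collection of endpoints that have connectivity between them."""
    name: str
    driver: str = "bridge"


@dataclass
class Endpoint:
    """Joins one sandbox to one network."""
    network: Network
    ip_address: str


@dataclass
class Sandbox:
    """A container's network stack; may hold endpoints from several networks."""
    container: str
    endpoints: list = field(default_factory=list)

    def join(self, network, ip_address):
        self.endpoints.append(Endpoint(network, ip_address))

    def networks(self):
        return {endpoint.network.name for endpoint in self.endpoints}


def can_communicate(a, b):
    """Two sandboxes have connectivity only if they share at least one network."""
    return bool(a.networks() & b.networks())
```

A web sandbox joined to both a frontend and a backend network can reach a db sandbox that is only on backend, while a sandbox with no endpoints cannot reach either, which matches the rule that endpoints not connected to a network have no connectivity.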

Linux Networking Fundamentals

Docker networking uses the Linux Kernel's networking stack as low-level primitives to create higher level network drivers. In short, Docker networking is Linux networking.

The tools listed below provide forwarding rules, network segmentation, and management tools for the dynamic network policy.

- Linux Bridge

- A layer 2 device that is the virtual implementation of a physical switch inside the Linux kernel. It forwards traffic based on MAC addresses, which it learns dynamically by inspecting traffic. Linux bridges are used extensively in many of the Docker network drivers.

- A Linux bridge is not to be confused with the bridge Docker network driver, which is a higher-level implementation of the Linux bridge.

- Network Namespaces

- A Linux network namespace is an isolated network stack in the kernel with its own interfaces, routes, and firewall rules. It is a security aspect of containers and Linux, used to isolate containers.

- In networking terminology, they are akin to a VRF that segments the network control and data plane inside the host.

- Network namespaces ensure that two containers on the same host aren't able to communicate with each other or even the host itself, unless configured to do so via Docker networks.

- Typically, CNM network drivers implement a separate namespace for each container. However, containers can share the same network namespace or even be a part of the host's network namespace. The host network namespace contains the host interfaces and host routing table.

- Virtual Ethernet Devices

- A virtual Ethernet device (veth) is a Linux networking interface that acts as a connecting wire between two network namespaces.

- A veth is a full duplex link that has a single interface in each namespace. Traffic in one interface is directed out the other interface.

- Docker network drivers utilize veths to provide explicit connections between namespaces when docker networks are created. When a container is attached to a Docker network, one end of the veth is placed inside the container (usually seen as the ethX interface) while the other is attached to the Docker network.

- iptables

- The native packet filtering system that has been a part of the Linux kernel since version 2.4.

- It's a feature rich L3/L4 firewall that provides rule chains for packet marking, masquerading, and dropping. The native Docker network drivers utilize iptables extensively to segment network traffic, provide host port mapping, and to mark traffic for load balancing decisions.
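The MAC learning behaviour described for the Linux bridge can be illustrated with a small simulation. This is a conceptual sketch of a learning switch, not kernel code. The port names stand in for the host-side ends of veth pairs, and the MAC addresses in the usage note are placeholders.

```python
class LearningBridge:
    """Toy layer 2 bridge that learns which port each MAC address lives on."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Process one frame and return the sorted list of ports it is sent out of."""
        self.mac_table[src_mac] = in_port  # learn where the sender lives
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            return sorted(self.ports - {in_port})  # unknown destination: flood
        if out_port == in_port:
            return []  # destination is on the arrival port: nothing to forward
        return [out_port]
```

With ports veth0, veth1, and veth2, the first frame from "aa" to "bb" is flooded to veth1 and veth2 because "bb" is unknown. Once "bb" replies, the bridge has learned both addresses and later frames between them go out of a single port.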

Docker Native Networking Drivers

The Docker native network drivers are part of the Docker Engine and don't require any extra modules. They are invoked through Docker network commands.

Host Networking Driver

- With the host driver, a container uses the networking stack of the host. There is no namespace separation and all interfaces on host can be used directly by the container.

Host Networking Mode: https://docs.docker.com/network/host/

- Containers don't get their own IP address when using host Networking mode

- Port mapping does not take effect

- Container ports are mapped directly to the host ports.

- Host networking mode can be useful to optimize performance and in situations where a container needs to handle a large range of ports, as it does not require network address translation (NAT), and no "userland-proxy" is created for each port. The host networking driver only works on Linux hosts, and is not supported on Docker Desktop for Mac, Docker Desktop for Windows, or Docker EE for Windows Server.

Bridge Networking Mode: https://docs.docker.com/network/bridge/

- User-defined bridges provide automatic DNS resolution between containers.

- User-defined bridges provide better isolation

- Containers can be attached and detached from user-defined networks on the fly.

- Each user-defined network creates a configurable bridge.

- Linked containers on the default bridge network share environment variables.

macvlan Network driver: https://docs.docker.com/network/macvlan/

- The macvlan driver uses the Linux macvlan bridge mode to establish a connection between container interfaces and a parent host interface (or sub-interfaces).

- Can be used to provide IP addresses to containers that are routable on the physical network.

- Additionally, VLANs can be trunked to the macvlan driver to enforce layer 2 container segmentation.

- Use-cases include:

- Very low-latency applications

- Network designs that require containers to be on the same subnet as, and use IPs from, the external host network.

- NOT COMPATIBLE WITH AWS!! The physical network needs to support multiple MAC addresses on the wire, and cloud providers with virtualized networking do not support this. You need to be running on network infrastructure you control, or with a provider that supports it.

Overlay Networking Driver: https://docs.docker.com/network/overlay/

- https://www.youtube.com/watch?v=b3XDl0YsVsg&list=PLvKYtbtVx5r3yf9JdQi0NOnIIYF77uFE-&index=8&t=0s

- Creates an overlay network that supports multi-host networks out of the box.

- It uses a combination of local Linux bridges and VXLAN to overlay container-to-container communications over physical network infrastructure.
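
To make the VXLAN part of this concrete: VXLAN wraps each layer 2 frame in a UDP packet and prefixes it with an 8-byte header carrying a 24-bit network identifier (VNI), as defined in RFC 7348. The sketch below builds and parses only that 8-byte header. The function names are hypothetical, the outer Ethernet/IP/UDP headers are omitted, and nothing here reflects how Docker's overlay driver is actually coded.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def vxlan_encapsulate(vni, inner_frame):
    """Prefix an inner layer 2 frame with an 8-byte VXLAN header."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Layout: flags (1 byte), reserved (3 bytes), VNI (3 bytes), reserved (1 byte).
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(packet):
    """Return (vni, inner_frame) from a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8, packet[8:]


# Hypothetical VNI and payload for illustration.
packet = vxlan_encapsulate(4097, b"inner ethernet frame")
print(vxlan_decapsulate(packet))
```

The VNI is what keeps traffic from different overlay networks apart even though all of it travels over the same physical network.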

None Driver

- The none driver gives a container its own networking stack and network namespace but does not configure interfaces inside the container. Without additional configuration, the container is completely isolated from the host networking stack.

- Can be useful when the container does not need to communicate with other applications or need to reach internet, such as jobs processing some potentially sensitive data.

Docker Storage

There are lots of places inside Docker (both at the engine level and container level) that use or work with storage.

You can use the command $ docker info to check the storage driver, data space used, data space available, etc.

You can use the command $ docker ps -s to get the approximate size of a running container.

$ docker ps -s

CONTAINER ID   IMAGE    COMMAND       CREATED         NAMES      SIZE

f12321jkjfds   Ubuntu   "/bin/bash"   5 seconds ago   upbeat_d   748kB (virtual 65MB)

- Size is the amount of data (on disk) that is used for the writeable layer of each container.

- Virtual size is the amount of data used for the read-only image data used by the container plus the container's writable layer size.

Docker storage overview:

Docker Images (Storage)

- Docker images are the base of everything. They are composed of a stack of read-only layers, where each layer records a Docker command (a Dockerfile instruction).

- When we start a container from an image, the Docker engine takes the read-only stack of layers and adds a read-write layer on top of it where the changes are applied.

- It also makes sure that the changes in the read-write layer hide the underlying original file in the read-only layer.

- To understand what happens in the background, we need to look at the copy-on-write mechanism.

Copy-on-write mechanism

- When we run a container from a Docker image, the Docker engine does not make a full copy of the already stored image. Instead, it uses something called the copy-on-write mechanism.

- copy-on-write is a standard UNIX pattern that provides a single shared copy of some data, until the data is modified. To do this, changes between the image and the running container are tracked.

- Immediately before any write operation is performed in the running container, a copy of the file that would be modified is placed on the writeable layer of the container, and that is where the write operation takes place. Hence the name copy-on-write.

- If this didn't occur, each time you launched a container, a full copy of the filesystem would have to be made. This would add time to the startup process and would use a lot of disk space.

- Because of the copy-on-write mechanism, running containers can take less than 0.1 seconds to start up, and can occupy less than 1MB on disk. Compared with VMs, which can take minutes to start and can occupy GBs of disk space, you can see why Docker has been adopted so quickly.

- You may wonder how the copy-on-write mechanism is implemented. To understand that, we need to look at the Union File System.
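
The copy-on-write idea can be modelled with plain dictionaries. This is a conceptual sketch, not Docker's implementation: the `Image` and `Container` classes and the file path are hypothetical, and file contents are simple strings. It shows two containers sharing one read-only image, with a file copied into a container's writable layer only at the moment that container first modifies it.

```python
class Image:
    """Read-only file set shared by every container started from it."""

    def __init__(self, files):
        self.files = dict(files)


class Container:
    def __init__(self, image):
        self.image = image   # shared, never modified by the container
        self.writable = {}   # per-container writable layer, starts empty

    def read(self, path):
        # The writable layer wins; otherwise fall through to the image.
        if path in self.writable:
            return self.writable[path]
        return self.image.files[path]

    def append(self, path, data):
        if path not in self.writable:
            # Copy-up: bring the original into the writable layer first.
            self.writable[path] = self.image.files.get(path, "")
        # The write itself only ever touches the writable layer.
        self.writable[path] += data


image = Image({"/etc/motd": "hello\n"})
first, second = Container(image), Container(image)
first.append("/etc/motd", "changed in first\n")

print(repr(first.read("/etc/motd")))   # modified copy
print(repr(second.read("/etc/motd")))  # still the image's original
print(second.writable)                 # empty: nothing was copied for it
```

Starting `second` cost nothing beyond an empty dictionary, which is the reason container start-up is fast and small on disk.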

Union File System

- The Union File System (UFS) specializes in not storing duplicate data.

- If two images have identical data, that data does not have to be recorded twice on disk. Instead, the data can be stored once and then used in many locations.

- This is possible with something called a layer. Each layer is a file system, and as the name suggests, they can be layered on top of each other.

- Single layers containing shared files can be used in many images. This allows images to be constructed and deconstructed as needed, via the composition of different file system layers. The layers that come with an image you pull from Docker Hub are read-only. But when you run a container, you add a new, writable layer on top of them. When you write to a file, the entire stack is searched for that file. If the file is found, it is first copied to the writable layer. The write operation is then performed on that copy, not on the underlying layer.

- This works because when reading from a UFS volume, a search is done for the file that is being read. The first file that's found, reading from top to bottom, is used. That's why files on the writeable layer (which is on top) of your container are always used.

- The filesystem from the perspective of your running containers reads everything from the top down, from the top layer down to the bottom layer.

OverlayFS/Overlay2 is baked into Linux and widely used. How do layers work in OverlayFS?

- Two or more layers - R/O and R/W

- New files exist on the top-most (R/W) layer only

- Files are copied to the top-most layer and written to

- Deleted files are hidden, but still exist in R/O layers
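
The lookup and deletion rules listed above can be sketched as follows. This is a simplified model under stated assumptions: layers are dictionaries ordered top-most first, only the top layer is writable, and a sentinel object named `WHITEOUT` (a hypothetical name) stands in for the marker that hides a file which still exists in a read-only layer.

```python
WHITEOUT = object()  # marker in the top layer that hides a lower-layer file


def lookup(layers, path):
    """Search layers from top to bottom and return the first match."""
    for layer in layers:
        if path in layer:
            entry = layer[path]
            if entry is WHITEOUT:
                raise FileNotFoundError(path)
            return entry
    raise FileNotFoundError(path)


def delete(layers, path):
    """Delete a visible file without touching the read-only layers."""
    lookup(layers, path)  # raises FileNotFoundError if the file is not visible
    upper, lowers = layers[0], layers[1:]
    if any(path in lower for lower in lowers):
        # The file lives in a read-only layer: hide it instead of removing it.
        upper[path] = WHITEOUT
    else:
        # The file only ever existed in the writable layer: really remove it.
        del upper[path]


read_only = {"/bin/sh": "shell binary"}
read_write = {"/tmp/new.txt": "created in the container"}
layers = [read_write, read_only]

delete(layers, "/bin/sh")
print("/bin/sh" in read_only)  # True: the lower layer is untouched
```

After the delete, `lookup(layers, "/bin/sh")` raises `FileNotFoundError` even though the read-only layer still holds the file.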

Types of Docker Storage:

Persistent Storage

- Files remain available after the container is stopped.

- Data is stored on the host machine.

- Application specification files and data that is required for processing and analysis after the Container is stopped can be stored in Persistent Storage.

- Docker gives three options for containers to store files on the host machine outside the container's writable layer. Bind mounts and volumes persist files after the container stops; a tmpfs mount keeps them only in host memory:

- Bind mounts (Windows/Linux)

- Volumes (Windows/Linux)

- tmpfs mount (Linux)

Non-persistent Storage

- Files are not available after the container is stopped.

- Data is stored in the container's writeable layer.

- Logging information, such as system logs or container logs, can be stored inside the container, which is non-persistent storage.

Storage Drivers:

- Allow data to be created in the container's writable layer.

- Use stackable image layers and CoW

- Provide pluggability

- Control how images and containers are stored and managed on the Docker host

- Docker supports several different storage drivers, and each storage driver handles the implementation differently, but all drivers use these features.

Storage Driver Options:

- overlay2

- Btrfs

- Device mapper

- ZFS

- aufs

- VFS

Volumes

- Volumes are created and managed by Docker.

- Volumes are isolated from the core functionality of the host machine (whereas with a bind mount you're mounting an actual filesystem path that exists on the host into the container).

- Volumes support the use of volume drivers, to store your data on remote hosts or cloud providers, among other possibilities. Examples: REX-Ray plugin, Netshare plugin.

To create a Docker volume, use the $ docker volume create command, or Docker can create a volume during container or service creation.

Docker volumes are located in: /var/lib/docker/volumes/<volume_name>/_data/

Volumes advantages:

- Volumes are easier to back up or migrate when compared to bind mounts.

- Managing volumes can be done by Docker CLI commands or the Docker API.

Use Cases for Volume:

- Sharing data among multiple running containers.

- Storing data remotely on a host or cloud, rather than locally.

- Decoupling the configuration of the Docker host from the container runtime.

- When you need to back up, restore, or migrate data from one Docker host to another, volumes are a better choice.

If you start a container with a volume that does not yet exist, Docker creates the volume for you. The following example mounts the volume myvol1 into /app/ in the container.

$ docker inspect devtest1 | jq '.[0].Mounts'

[

{

"Type": "volume",

"Name": "myvol1",

"Source": "/var/lib/docker/volumes/myvol1/_data",

"Destination": "/app",

"Driver": "local",

"Mode": "z",

"RW": true,

"Propagation": ""

}

]

- This shows that the mount is a volume, shows the correct source and destination, and shows that the mount is read-write.
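
If you would rather check mounts from a script than read the JSON by eye, the output of $ docker inspect can be parsed directly. The sketch below is a minimal example that works on a JSON string, so it runs without Docker; the `summarize_mounts` function is a hypothetical helper, and the sample simply repeats the fields shown above.

```python
import json


def summarize_mounts(inspect_json):
    """Return one summary line per mount of the first inspected container."""
    containers = json.loads(inspect_json)  # docker inspect prints a JSON array
    lines = []
    for mount in containers[0].get("Mounts", []):
        access = "rw" if mount.get("RW") else "ro"
        lines.append(
            f'{mount["Type"]}: {mount["Source"]} -> {mount["Destination"]} ({access})'
        )
    return lines


# Sample trimmed down to the Mounts section shown above.
sample = """
[{"Mounts": [{"Type": "volume", "Name": "myvol1",
              "Source": "/var/lib/docker/volumes/myvol1/_data",
              "Destination": "/app", "Driver": "local",
              "Mode": "z", "RW": true, "Propagation": ""}]}]
"""

for line in summarize_mounts(sample):
    print(line)
```

The same helper works for bind mounts, since they appear in the same "Mounts" array with "Type" set to "bind".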

Bind Mounts

Bind mounts:

- have been around since the early days of Docker.

- Bind mounts have limited functionality compared to volumes.

- When you use a bind mount, a file or directory on the host machine is mounted into a container. The file or directory is referenced by its full or relative path on the host machine.

- Bind mounts are very performant, but they rely on the host machine's filesystem having a specific directory structure available.

- In bind mount you are referencing a direct location on a host that you want to make available inside of the container. (Different from volume where we don't have to specify the location because docker knows where to create the volume).

Practical use cases for Bind mounts:

- Sharing configuration files from the host to the containers. This is how Docker provides DNS resolution to containers by default, by mounting /etc/resolv.conf from the host machine into each container.

- Sharing source code or build artifacts between a development environment on the Docker host and a container.

Caveats:

- Bind mounts allow access to sensitive files.

The $ docker run command will run a container and bind-mount the target/ directory into your container at /app/

$ docker run -d -it --name devtest -v "$(pwd)"/target:/app nginx:latest

9478997432979975579438f78c4699794v584c54

The $ docker inspect devtest command shows that the mount is a bind mount, shows the correct source and destination, and shows that the mount is read-write.

$ docker inspect devtest

[

{

"Id": "93042894712937194314121083928",

"Created": "2020-04-23T20:34:54.2324324Z",

"Path": "nginx",

.

.

"Mounts": [

{

"Type": "bind",

"Source": "/root/target",

"Destination": "/app",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

],

tmpfs Mount

If you're running Docker on Linux, you have a third option. With tmpfs Mount, when you create a container the container can create files outside the container's writable layer.

- As opposed to volumes and bind mounts, a tmpfs Mount is temporary, and only persisted in the host memory.

- When the container stops, the tmpfs Mount is removed, and files written there won't be persisted.

Practical Use case:

- Best used for cases when you do not want the data to persist either on the host machine or within the container.

- To protect the performance of the container when your application needs to write a large volume of non-persistent state data.

- Docker swarm uses tmpfs to store secrets.

$ docker run -d -it --name tmptest --mount type=tmpfs,destination=/app nginx:latest

324237498939b797989B979237923f

$ docker exec -it 32423 df -h

Filesystem                                             Size   Used   Avail   Use%   Mounted on

/dev/mapper/docker-259:4-3242342-3242341313423423252   9.8G   167M   9.2G    2%     /

tmpfs                                                  1.9G   0M     1.9G    0%     /app

Docker Registries

Since Docker images are immutable, layered file system snapshots, you will often want to share a completed image or use one that someone else has built. Registries are where images are stored and shared.

When you start a task with an image not found locally, or perform the $ docker pull command, Docker will pull from a registry.

Images without a URL specified (e.g. httpd) will download from Docker Hub. However, there are many different registries. In addition to Docker Hub, there is the public registry Quay.io, as well as a number of privately hosted registries like our ECR, and even on-premises registry products like GitLab Container Registry. You can also run your own registry.

To download from a registry, you'll need the image path which is:

url/image_name:tag

Example:

quay.io/coreos/fluentd-kubernetes:cloudwatch

Registry URL: quay.io/coreos

Image name: fluentd-kubernetes

Tag: cloudwatch

- If no tag is provided Docker will default to pulling the 'latest' tag.

- If no registry is specified, Docker will default to pulling from Docker Hub.
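
The defaulting rules above can be sketched as a small parser. This is a simplified approximation, not Docker's actual reference grammar: `parse_image_reference` is a hypothetical helper, digests (`@sha256:...`) are not handled, and the first path component is treated as a registry only if it contains a dot or a colon or is `localhost`. Note that it splits the example slightly more finely than the breakdown above, reporting `quay.io` as the registry and `coreos/fluentd-kubernetes` as the repository path.

```python
def parse_image_reference(reference):
    """Split an image reference into (registry, repository, tag)."""
    registry = "docker.io"  # Docker Hub is the default registry
    remainder = reference
    first, slash, rest = reference.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest

    name, tag = remainder, "latest"  # 'latest' is the default tag
    last_component = remainder.rsplit("/", 1)[-1]
    if ":" in last_component:
        name, tag = remainder.rsplit(":", 1)

    if registry == "docker.io" and "/" not in name:
        # Official Docker Hub images live under the 'library' namespace.
        name = "library/" + name
    return registry, name, tag


print(parse_image_reference("httpd"))
print(parse_image_reference("quay.io/coreos/fluentd-kubernetes:cloudwatch"))
```

Checking for the colon only in the last path component is what lets a registry with a port, such as a hypothetical `localhost:5000/app`, parse without the port being mistaken for a tag.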

Docker Logging

Docker Daemon logs/Engine Logs

Since the Docker daemon manages the containers' lifecycles, daemon logs may help you diagnose problems with the container lifecycle. They may also help to resolve issues related to the Docker daemon itself.

The location of those logs, however, will depend on the OS:

- For example, in RHEL/CentOS, it is /var/log/messages.

- For Debian:

- Pre-Debian 8: /var/log/daemon.log

- Debian 8 and later: use $ journalctl -fu docker.service

- For Ubuntu, similar to Debian, log location depends on version

- Ubuntu 14.10 or older: /var/log/upstart/docker.log

- Ubuntu 16.04 and newer, and CentOS: use $ journalctl -u docker.service

It should be clear that Docker Daemon messages only pertain to the operation of Docker containers, and not the apps running within each container. It's up to the container and app engineer to specify how or if app logs will be stored on the host instance.

Container Logs

Containers are ephemeral and emit logs on the stdout and stderr output streams. By default, the logs from these output streams are stored in JSON files at the location shown below:

- /var/lib/docker/containers/<containerID>/<containerID>-json.log

Thus applications running within containers must emit their logs on the stdout and stderr output streams for the logs to be available after the container dies.

You can also view container logs using $ docker logs command:

$ docker logs <containerID>

$ docker ps

CONTAINER ID   IMAGE                            COMMAND    CREATED       STATUS                  PORTS   NAMES

25164d177f7a   amazon/amazon-ecs-agent:latest   "/agent"   6 hours ago   Up 6 hours (healthy)            ecs-agent

$ docker logs 2516 | head
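
With the default json-file driver, each line of the log file is a JSON object with "log", "stream", and "time" fields. The following is a minimal sketch of reading such lines in Python; `parse_json_log` is a hypothetical helper, and the two sample lines are invented for illustration rather than taken from a real container.

```python
import json


def parse_json_log(lines):
    """Turn json-file log lines into (time, stream, message) tuples."""
    entries = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        # The message keeps its trailing newline in the file; drop it here.
        entries.append((record["time"], record["stream"], record["log"].rstrip("\n")))
    return entries


# Invented sample lines in the json-file layout.
sample_lines = [
    '{"log":"starting agent\\n","stream":"stdout","time":"2020-04-23T20:34:54.232432400Z"}',
    '{"log":"could not reach endpoint\\n","stream":"stderr","time":"2020-04-23T20:34:55.000000000Z"}',
]

for time, stream, message in parse_json_log(sample_lines):
    print(time, stream, message)
```

Filtering on the stream field is an easy way to separate an application's error output from its normal output when both end up in the same file.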

Docker Debugging

There may be times when you may need to have more visibility when troubleshooting things like:

- Docker daemon startup

- Container lifecycle events such as start or stop

- Other daemon-related failures

Enabling debugging will provide additional details in the logs, which may help shed some light on what transpired and caused the failure.

- However, be careful not to leave it on after having finished your troubleshooting as debugging can cause the log size to increase significantly and fill up disk space.

Although there are a couple of ways to enable debugging, Docker's recommended approach is to set the debug key to 'true' in the daemon.json file - usually located in /etc/docker. The file may also need to be created if it doesn't already exist.

Example of what the key should look like in an otherwise empty daemon.json file:

{

"debug": true

}

Once the entry has been added, the docker daemon must be restarted, which will terminate any running containers.
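
When daemon.json already contains other settings, the debug key has to be merged in rather than overwriting the file. A minimal sketch of that merge is shown below; it operates on the file contents as a string so it can be tried without touching /etc/docker, and `enable_debug` is a hypothetical helper name.

```python
import json


def enable_debug(daemon_json_text):
    """Return daemon.json contents with "debug": true, keeping other keys."""
    # An empty or missing file is treated as an empty configuration.
    config = json.loads(daemon_json_text) if daemon_json_text.strip() else {}
    config["debug"] = True
    return json.dumps(config, indent=2) + "\n"


print(enable_debug(""))
print(enable_debug('{"storage-driver": "overlay2"}'))
```

Writing the result back to the file and restarting the daemon are left out on purpose, since both need root privileges and affect running containers.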

Enable debugging in Docker (ECS-optimized) by adding the line below, and then restart Docker:

$ sudo vi /etc/sysconfig/docker

...

OPTIONS="-D --default-ulimit nofile=1024:4096"

$ sudo service docker restart

View Stack Traces

The Docker Daemon log can be viewed by using the following command:

$ journalctl -u docker.service

...goroutine stacks written to /var/run/docker/goroutine-stacks-2017-06-02T1033336z.log

...daemon datastructure dump written to /var/run/docker/daemon-data-2017-06-02T1033336z.log

- The locations where Docker saves these stack traces and dumps depend on your OS and configuration. You can sometimes get useful diagnostic info straight from the stack traces and dumps. Otherwise, you can provide this information to Docker for help diagnosing the problem.

Logging Strategies

The best practice for logging includes shipping the logs from the host to a centralized logging system and configuring log rotation so that the host does not fill up the available disk space. There are several strategies available for capturing logs from Docker.

Logging via Application:

- With this strategy, applications running within the container handle their own logging using a logging framework.

- The logging framework may be configured to forward logs to a remote centralized logging system such as ELK. The benefit of this approach is that it gives developers more control over logging.

- However, application processes might get loaded down. Also, when forwarding logs from within the container, the container ID will not be included in the logs, making it difficult to tell which container the logs came from.

Logging Using Data volumes:

- With this approach you can create a directory within a container that links with a directory on the host machine, and then configure your application to write logs to the linked directory within the container.

Logging Using the Docker Logging Driver:

- While the default configuration for containers writes logs to the previously mentioned files, containers can be configured to forward log events to the syslog, gelf, journald, and other endpoints. Also, there are log drivers such as awslogs and splunk available that can forward logs directly to a centralized logging system such as AWS CloudWatch. You can change the default logging driver by modifying the configuration in the Docker Daemon.

- Since the container won't read or write to the log file, you might notice performance improvements with this approach. However, you may find that containers stop if the TCP connection cannot be made to the remote logging system. Also, the $ docker logs command will not display logs, since that command works with the default logging driver.

- Supported Logging Drivers: https://docs.docker.com/config/containers/logging/configure/#supported-logging-drivers

- Configuring Logging Drivers: https://docs.docker.com/config/containers/logging/configure/
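
As an illustration of changing the default logging driver in the daemon configuration, the sketch below generates a daemon.json fragment that keeps the json-file driver but turns on log rotation through its max-size and max-file options. The `logging_config` helper and the chosen values (10m, 3 files) are assumptions for the example. The values are converted to strings because log-opts entries in daemon.json are expected to be strings.

```python
import json


def logging_config(driver="json-file", max_size="10m", max_file=3):
    """Build the logging part of a daemon.json configuration."""
    return {
        "log-driver": driver,
        "log-opts": {
            # Option values are given as strings, even when they are numeric.
            "max-size": str(max_size),
            "max-file": str(max_file),
        },
    }


print(json.dumps(logging_config(), indent=2))
```

A changed default like this would typically apply only to containers created after the daemon picks up the new configuration, so existing containers keep the logging settings they were created with.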

Logging Using a Dedicated Logging Container:

- This approach allows you to run a dedicated logging container on the host that can access logs for all the containers running on that host. This container is configured for logging purposes and does the job of aggregating, analyzing, and storing or forwarding logs to a central logging system.

- Example: https://github.com/gliderlabs/logspout

Logging Using the Sidecar Approach:

- This approach uses logging containers, but unlike dedicated logging containers, every application container runs with a separate logging container. The sidecar container can read logs from shared volumes, or it can run a daemon such as syslog, which will forward logs to a centralized system. This approach is helpful in scenarios where access to the host system is not available. It also allows the logs to be tagged and shipped for each application container.

- The downside of this approach is the number of required resources, since each app container will be running with a sidecar container.

References:

Install Docker on Linux

https://runnable.com/docker/install-docker-on-linux

Learn and get started with Docker:

https://aws.amazon.com/docker/

https://docs.docker.com/get-started/

https://docs.docker.com/get-started/overview/

Great intro video:

https://www.youtube.com/watch?v=eGz9DS-aIeY

My Little Projects

Mediawiki Dockerized

- Couldn't get much done by following the official mediawiki docker hub page: https://hub.docker.com/_/mediawiki

- This helped a lot: https://collabnix.com/how-to-setup-mediawiki-in-seconds-using-docker/

- Other sorta helpful links:

https://hub.docker.com/_/mariadb/

https://linuxconfig.org/mediawiki-easy-deployment-with-docker-container

mysql: (How-to) https://phoenixnap.com/kb/mysql-docker-container

mariadb: https://hub.docker.com/_/mariadb/

Create LAMP stack using Docker Compose

Create a container for each component of our LAMP stack:

- Ubuntu 18.04

- apache-php

- database

The different containers must be able to speak to each other: to easily orchestrate linked containers we will use docker-compose.

Test later:

https://www.thearmchaircritic.org/tech-journals/creating-a-lamp-stack-using-docker-compose

https://www.hamrodev.com/en/app-development/lamp-docker-tutorial